Agentic Rules Enforcer

Arnica’s Agentic Rules Enforcer puts security guardrails where they matter most: at the moment code is generated. By automatically enforcing version-controlled secure coding rules across every AI coding agent and repository, Arnie prevents risk before it ever reaches review.

The Challenge with Securing AI-Driven Code

AI models are trained on vast amounts of public code, including vulnerable patterns, outdated practices, and misconfigurations, making insecure output the default unless guardrails are enforced.

Traditional security tools operate at the PR or CI stage, after AI-generated code has already been written, reviewed, and re-prompted, which creates costly rework and slows developers down.

Organizations lack centralized, enforceable controls to ensure every AI coding tool follows the same security and compliance rules across repositories, teams, and languages.

With AI coding, automatically identifying and fixing code risks (such as SAST and SCA findings, license violations, IaC misconfigurations, and low-reputation packages) before they are pushed to a feature branch is difficult and inconsistent.

Secure AI-Generated Code by Default

Prevent Risk at the Point of Code Generation

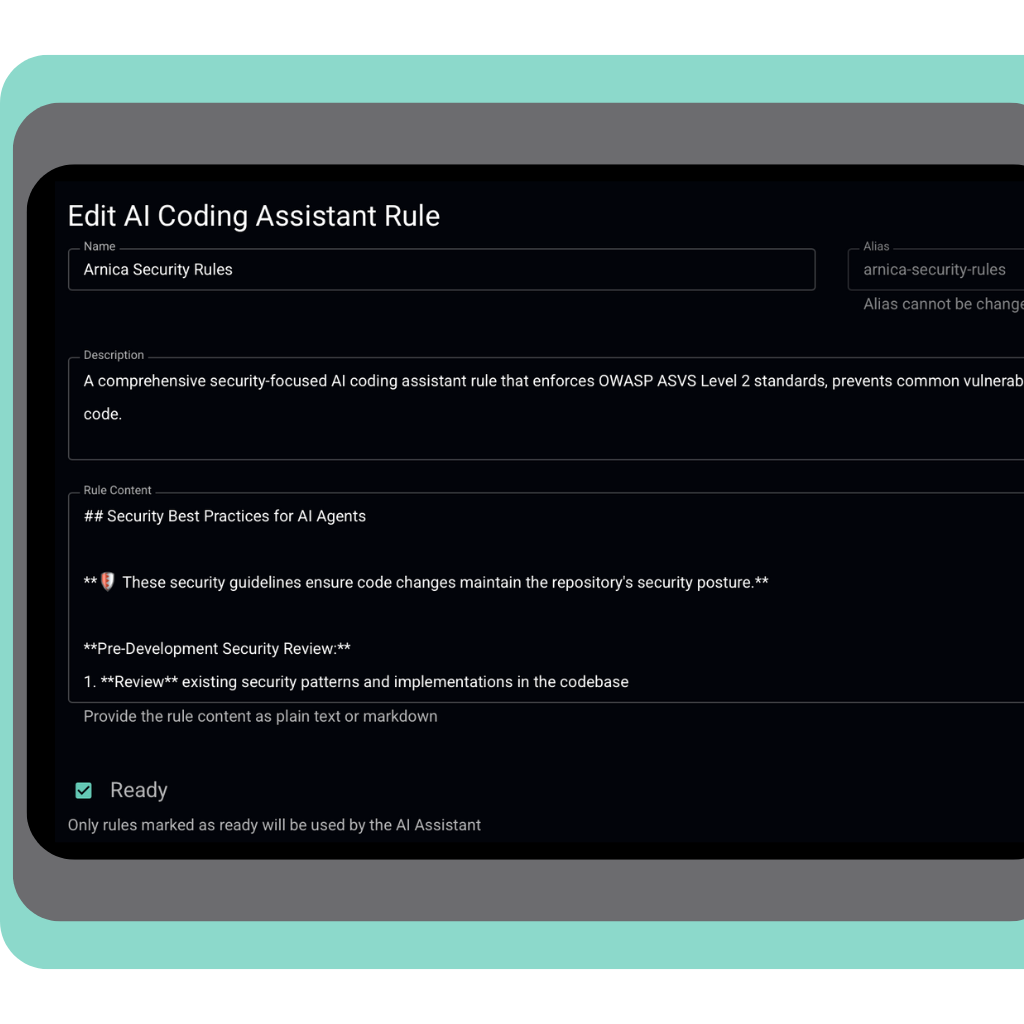

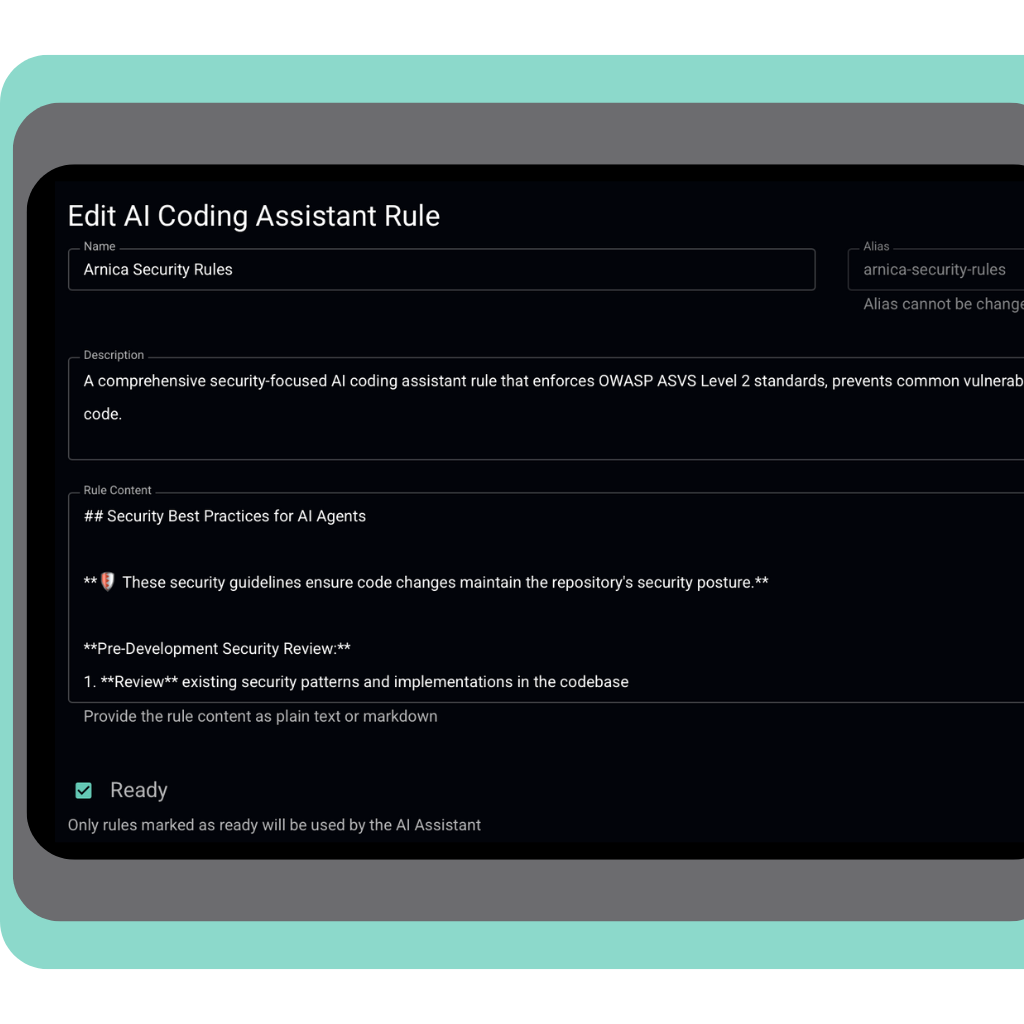

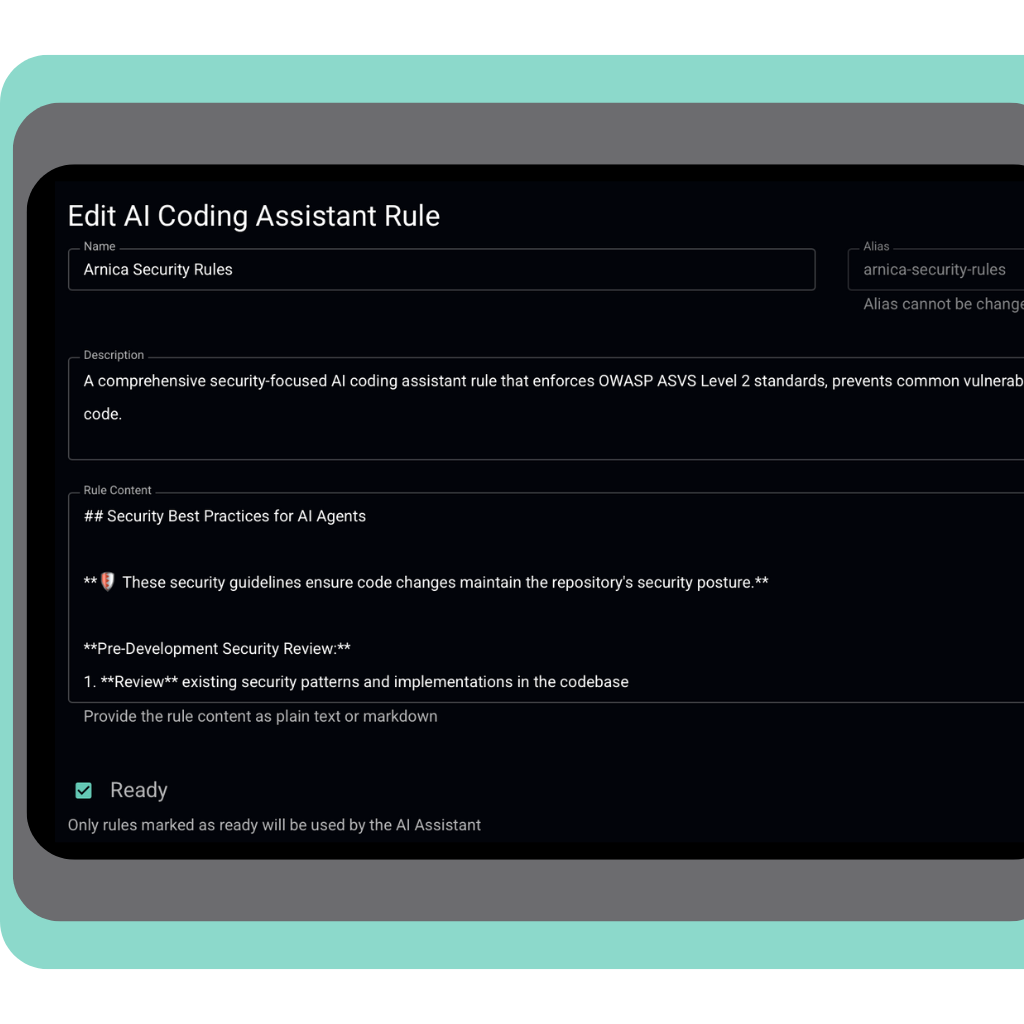

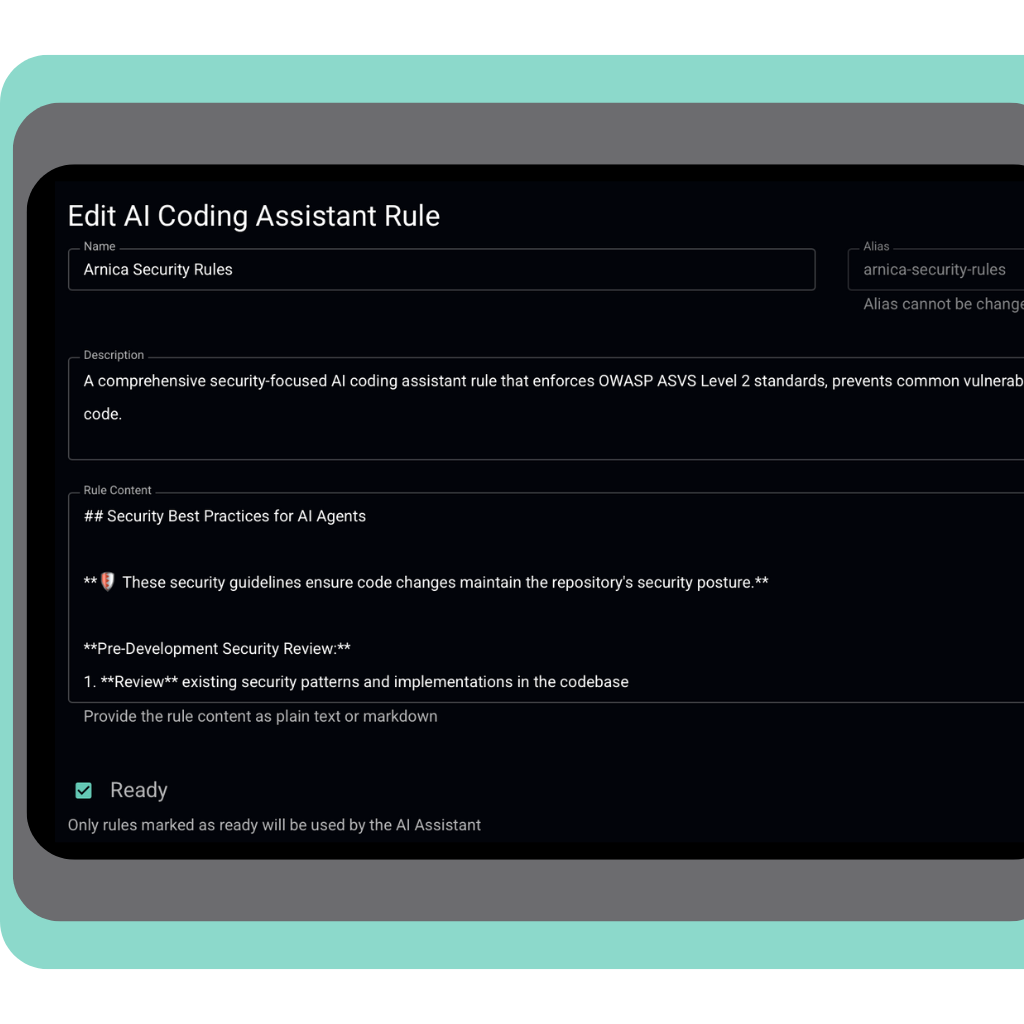

Arnica's Agentic Rules Enforcer injects code rules directly into coding tools like Claude, Cursor, and Copilot, enforcing security at the point of code generation.

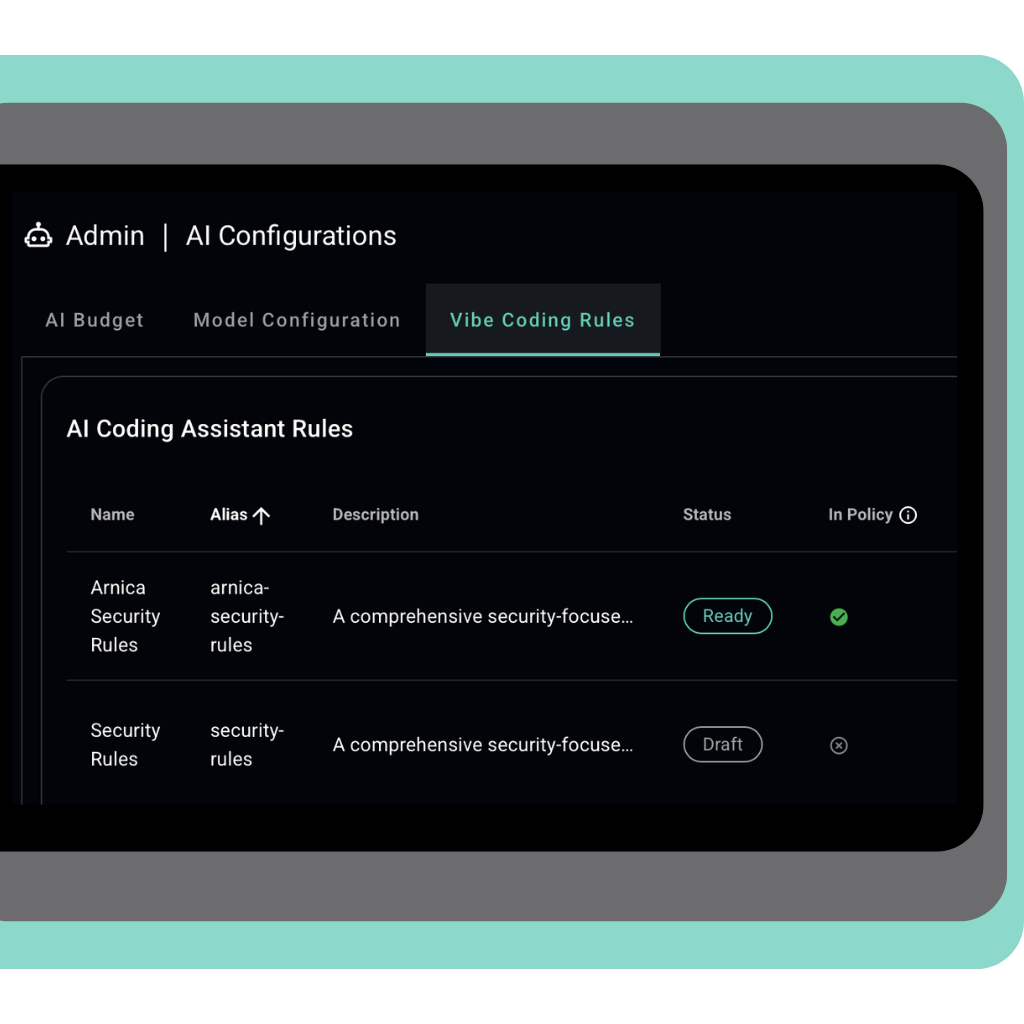

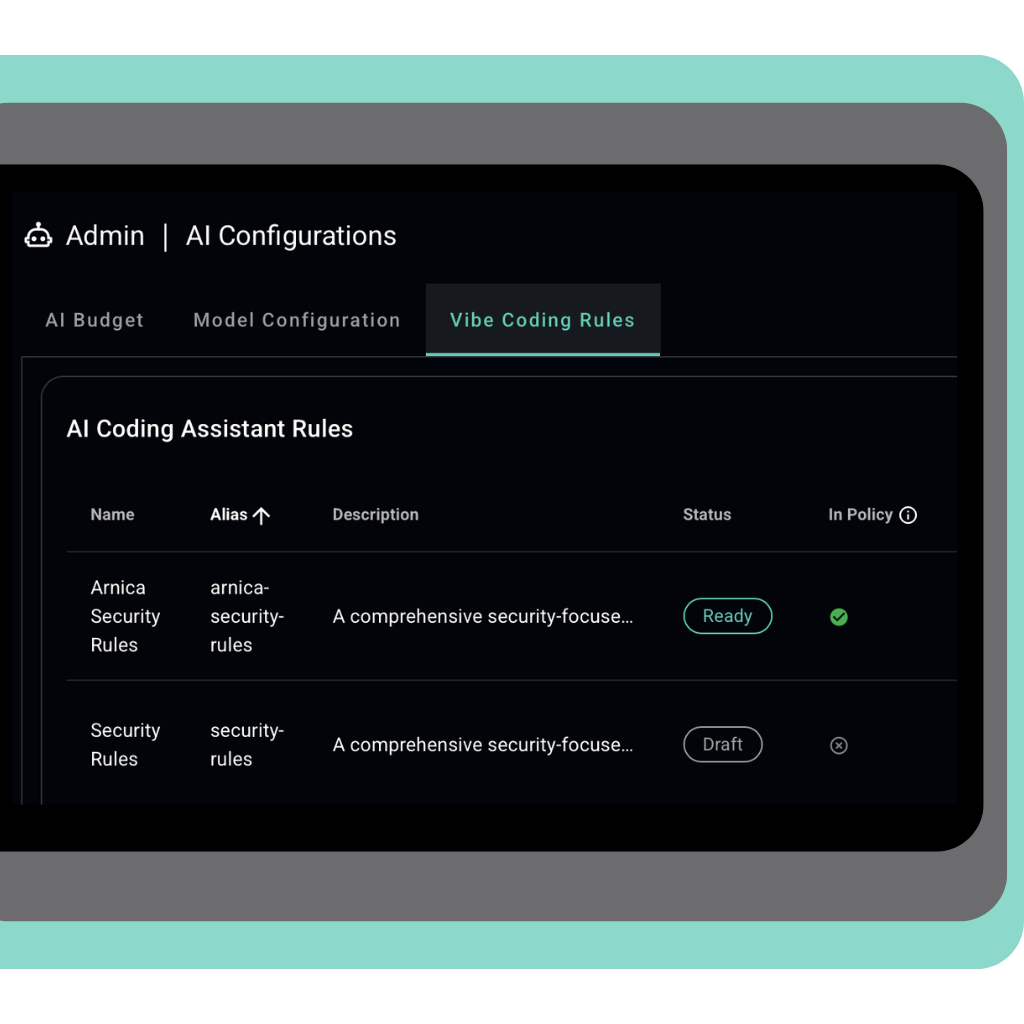

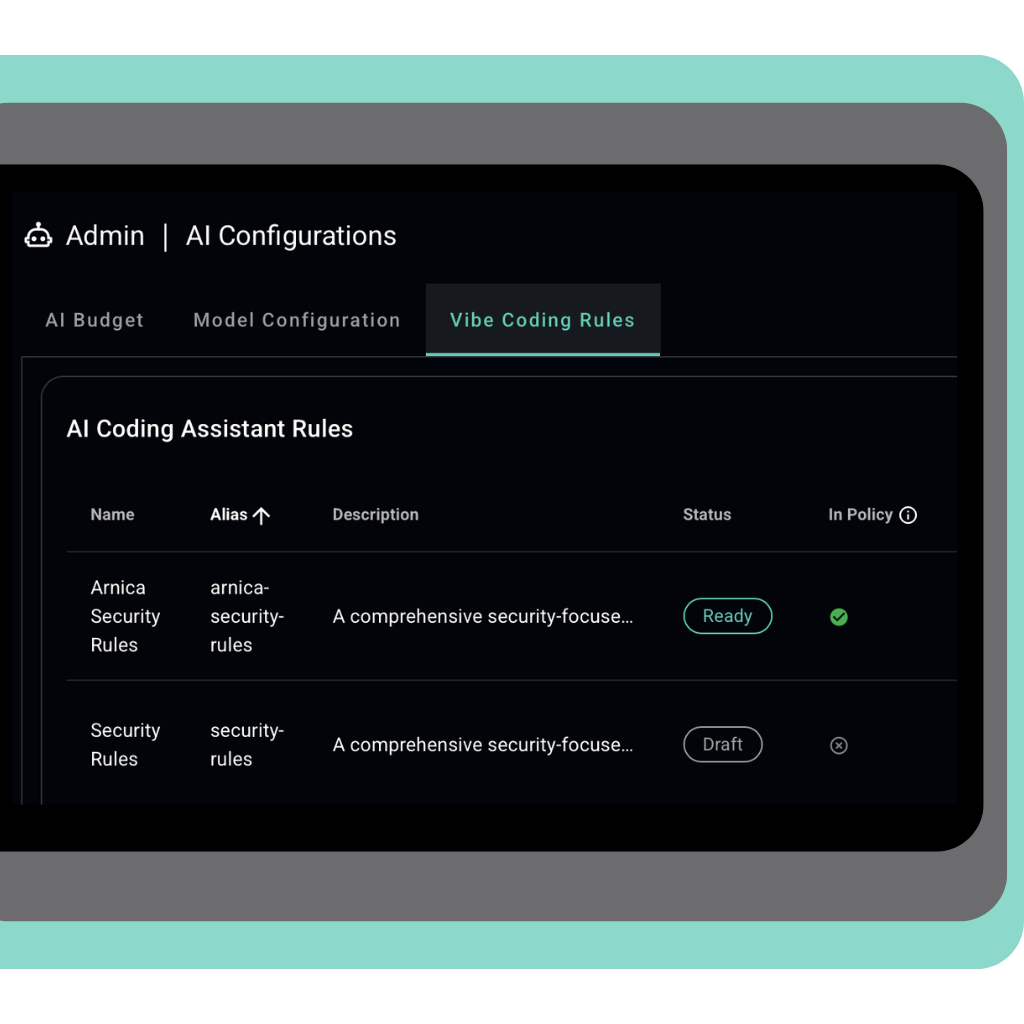

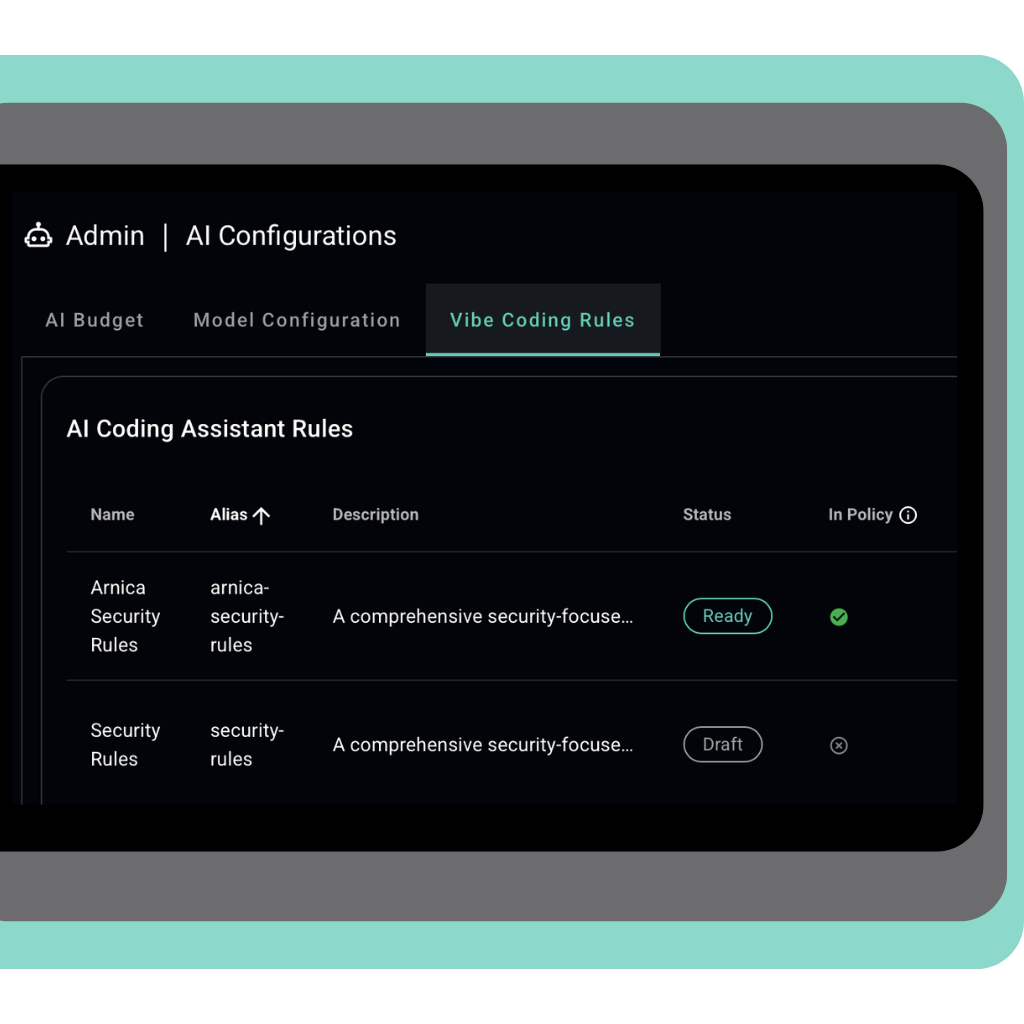

Centralized, Enterprise-Grade AI Governance

Use organization-wide policy enforcement to protect your entire enterprise from AI-driven risks.


Pipelineless, Agent-Native Enforcement

Enforce version-controlled secure coding rules directly inside the AI coding agents that developers use every day.
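To make the idea of agent-native rules concrete, here is a minimal sketch of how a single, version-controlled rule set could be written into the instruction files that coding agents read from a repository. This is an illustration only, not Arnica's implementation. The file paths are assumptions based on each tool's publicly documented conventions and should be checked against current documentation. The marker strings and the function names (`render_block`, `upsert_block`, `sync_rules`) are hypothetical.

```python
from pathlib import Path

# Assumed repo-relative instruction files per agent (verify against each
# tool's current documentation; these are not taken from Arnica).
AGENT_RULE_FILES = {
    "claude": "CLAUDE.md",
    "cursor": ".cursor/rules/security.mdc",
    "copilot": ".github/copilot-instructions.md",
}

# Hypothetical markers delimiting the centrally managed section of each file.
BEGIN = "<!-- BEGIN managed-security-rules -->"
END = "<!-- END managed-security-rules -->"


def render_block(rules: list[str], version: str) -> str:
    """Render the managed section as a bulleted list tagged with a version."""
    lines = [BEGIN, f"Security rules (version {version}):"]
    lines += [f"- {rule}" for rule in rules]
    lines.append(END)
    return "\n".join(lines)


def upsert_block(existing: str, block: str) -> str:
    """Replace the managed section if present, otherwise append it.

    Text outside the markers (a team's own instructions) is left untouched.
    """
    start = existing.find(BEGIN)
    end = existing.find(END)
    if start != -1 and end > start:
        return existing[:start] + block + existing[end + len(END):]
    if not existing:
        separator = ""
    elif existing.endswith("\n"):
        separator = "\n"
    else:
        separator = "\n\n"
    return existing + separator + block + "\n"


def sync_rules(repo: Path, rules: list[str], version: str) -> list[Path]:
    """Write the managed section into every agent file; return files changed."""
    block = render_block(rules, version)
    changed = []
    for relative_path in AGENT_RULE_FILES.values():
        target = repo / relative_path
        target.parent.mkdir(parents=True, exist_ok=True)
        existing = target.read_text(encoding="utf-8") if target.exists() else ""
        updated = upsert_block(existing, block)
        if updated != existing:
            target.write_text(updated, encoding="utf-8")
            changed.append(target)
    return changed
```

Calling `sync_rules(Path("path/to/repo"), ["Never hardcode secrets."], "1.0.0")` would create or update the three files. Running it again with the same inputs changes nothing, so the rule set can live in one place under version control and be reapplied safely.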

Customer Testimonials

Hear what Arnica users have to say about how Arnica's code security capabilities helped them build their own world-class application security program.

Secure AI code by default in an insecure AI world.

Get full pipelineless coverage across your AI coding tools in minutes.